Quick Summary

The Model Context Protocol (MCP) is changing how AI applications connect, share context, and scale. By standardizing integrations, encouraging consistent security practices, and letting tools work together, MCP sets the stage for smarter, more future-proof AI ecosystems, much like APIs did for the web decades ago.

If you’ve been keeping an eye on the rapid evolution of AI, you’ve probably noticed a new buzzword floating around: the Model Context Protocol, or MCP. Sounds fancy, right? But what does it actually mean, and why is everyone from OpenAI to DeepMind paying attention to it?

Let’s break it down together: no jargon overload, no boring lectures. Just a friendly deep dive into what MCP is, why it matters, and how it could change the way we build and use AI applications.

What is the Model Context Protocol (MCP)?

Okay, let’s start simple.

Imagine you’re talking to an AI assistant. You ask it to summarize a PDF, pull data from your company’s CRM, and then send the results to Slack. Traditionally, the AI has to be hardwired into each of those tools, which is not very flexible, right?

This is where the Model Context Protocol comes in. MCP is an open protocol, introduced by Anthropic in late 2024, that works like a universal translator for AI tools and applications. It sets a common language so models, apps, and services can talk to each other without confusion. Instead of hand-building a custom integration for every pairing of model and tool, developers can implement MCP once and connect to any other client or tool that speaks it.

Think of it like the USB standard. Before USB, connecting devices was a mess. Now, you can plug almost anything into a USB port, and it just works. MCP is doing that for AI applications. So, when someone asks, “What is MCP?” or “What does MCP mean in AI?” the short answer is: it’s a protocol that standardizes how AI models connect, share context, and perform tasks across different applications.
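To make “common language” a little less abstract: MCP messages are JSON-RPC 2.0 envelopes, and methods such as `tools/list` and `tools/call` are part of the published protocol. Here is a minimal sketch of what a client might send. The tool name `summarize_pdf` and its arguments are made up for illustration, and the helper `make_request` is our own, not part of any SDK.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each request in a session gets its own id


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask a server which tools it offers, then call one of them.
list_tools = make_request("tools/list")
call_tool = make_request(
    "tools/call",
    # Hypothetical tool name and arguments, for illustration only.
    {"name": "summarize_pdf", "arguments": {"path": "report.pdf"}},
)

print(json.dumps(call_tool, indent=2))
```

Every tool, whether it wraps a PDF reader, a CRM, or Slack, is reached through the same two message shapes. That is the “USB port” part of the analogy.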

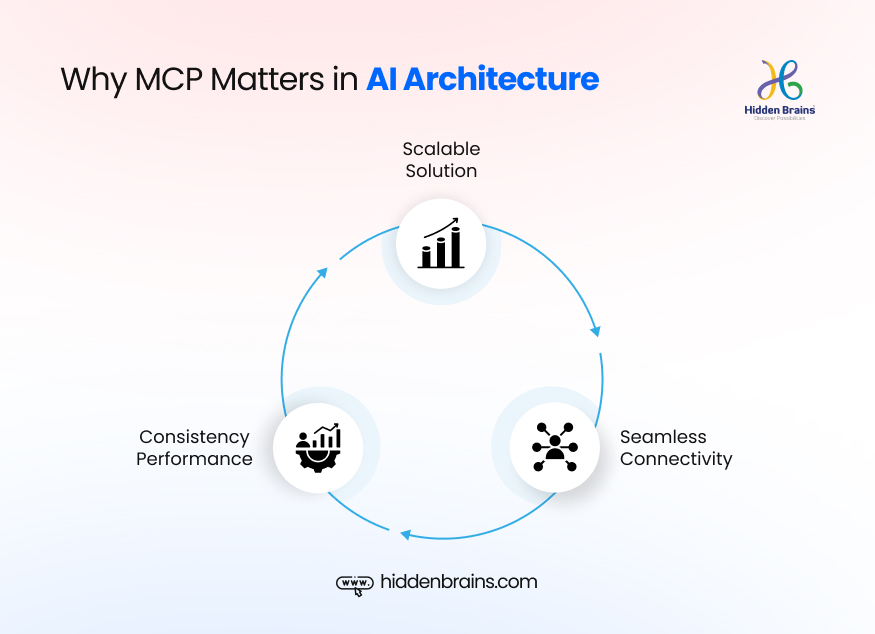

Why MCP Matters in AI Architecture

Now that you know the basics, let’s talk about the “why.”

AI models are powerful, but they’re also limited by the tools they can access. If the model can’t easily reach data or take actions, its usefulness shrinks. That’s why MCP benefits both developers and end-users.

Seamless Connectivity

No more clunky patchwork integrations. Think of MCP as the universal plug for custom AI development services. Instead of messy, one-off integrations that break with every update, MCP creates a smooth bridge between AI models and external tools. This makes workflows faster, cleaner, and far more reliable for both developers and businesses.

Consistency

Everyone speaks the same “protocol” language. Without a shared protocol, every integration feels like reinventing the wheel. MCP solves this by setting a common “language” for communication between AI and applications. Developers save time, apps work better together, and end-users get a consistent, frustration-free experience, no matter what platform they’re using.

Scalability

Build once, use everywhere. Scaling usually means rewriting or redesigning connections over and over. With MCP, you build a connector once and reuse it across every MCP-compatible client. That lets businesses using AI integration services grow their capabilities without hitting integration roadblocks, making innovation faster, more cost-effective, and easier to adapt to what’s next.

In short, MCP architecture makes AI systems more adaptive, less fragile, and future-proof.

MCP vs Function Calling in LLM Integrations

You might be thinking: “Wait… isn’t this just like function calling in large language models (LLMs)?”

Good question. Let’s compare.

- Function Calling: Imagine giving your AI a cookbook full of recipes (functions). If it wants to bake a cake, it finds the right recipe and follows it.

- Model Context Protocol (MCP): Instead of just following recipes, MCP sets up the whole kitchen. It ensures the oven, fridge, and mixer can all talk to each other seamlessly.

Function calling is powerful but narrow. MCP is broad and holistic; it handles not just the calls but also the context, security, and communication between multiple apps.

So, when we compare MCP vs function calling, the key takeaway is: Function calling is a feature. MCP is the ecosystem.
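Here is a rough sketch of that difference in code. With plain function calling, the application ships a fixed table of functions. With an MCP-style setup, each server advertises its own tools and the client discovers them at runtime. `ToyServer` and all tool names below are invented stand-ins for illustration; a real MCP server speaks JSON-RPC over a transport rather than taking direct method calls.

```python
from typing import Any, Callable, Dict, List, Optional

# Function calling: the application hard-codes the functions the model may use.
HARDCODED_FUNCTIONS: Dict[str, Callable[..., Any]] = {
    "set_timer": lambda minutes: f"timer set for {minutes} min",
}


class ToyServer:
    """Stand-in for an MCP server: it advertises its own tools on request."""

    def __init__(self, tools: Dict[str, Callable[..., Any]]) -> None:
        self._tools = tools

    def handle(self, method: str, params: Optional[dict] = None) -> Any:
        if method == "tools/list":
            return {"tools": [{"name": name} for name in sorted(self._tools)]}
        if method == "tools/call":
            return self._tools[params["name"]](**params["arguments"])
        raise ValueError(f"unknown method: {method}")


def discover(servers: Dict[str, ToyServer]) -> Dict[str, List[str]]:
    """Ask every connected server what it offers, with no per-tool client code."""
    return {
        label: [tool["name"] for tool in server.handle("tools/list")["tools"]]
        for label, server in servers.items()
    }


servers = {
    "calendar": ToyServer({"create_event": lambda title: f"created {title}"}),
    "crm": ToyServer({"find_customer": lambda email: {"email": email}}),
}

catalog = discover(servers)
result = servers["crm"].handle(
    "tools/call",
    {"name": "find_customer", "arguments": {"email": "a@example.com"}},
)
print(catalog)
print(result)
```

Adding a third server means adding one entry to `servers`. Nothing in `discover` or the calling code has to change, which is the “ecosystem” half of the comparison.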

Security Risks & Mitigation in MCP Implementations

Alright, let’s address the elephant in the room. Any time you open up a universal connector, you’re also opening the door to potential risks.

Common MCP Security Risks

- Unauthorized access: If MCP isn’t set up with strict controls, bad actors could trick the AI into tapping into sensitive apps or systems. The AI is only as secure as the gates it’s guarding.

- Data leakage: AI is great at connecting systems, but without clear boundaries, it might pass information between apps that should stay separate. Imagine your HR data flowing unintentionally into your analytics tool. Not good.

- Protocol misuse: The MCP core set of rules defines how data flows. If vulnerabilities exist, malicious actors might exploit them by injecting fake commands or manipulating workflows. It’s like hacking the rules of the conversation itself.

But don’t worry, there are ways to mitigate these risks.

Mitigation Strategies

- Authentication layers

Every MCP application should use strong authentication before exchanging data. Verifying every interaction ensures that only trusted users and systems can exchange data through MCP, which reduces the risk of impersonation or fake requests.

- Scoped permissions

Instead of giving AI applications blanket access, limit permissions to what’s necessary. Just like you wouldn’t give every mobile app access to your camera, contacts, and photos, an AI app using MCP should only get the exact data it needs.

- Audit logs

Always know who accessed what and when. That record helps you detect unusual activity quickly. Audit logs don’t just build accountability; they’re a safety net for identifying breaches and improving security policies over time.
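The three mitigations above can be sketched together in a few lines. This is a toy gateway, not a production design: the shared `SECRET`, the client id `assistant`, and tool names like `crm.read` are assumptions made up for the example, and a real deployment would more likely lean on an established scheme such as OAuth than on a hand-rolled token.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from datetime import datetime, timezone

SECRET = b"demo-secret"  # assumption: a real deployment loads this from a secret store


def sign(client_id: str) -> str:
    """Issue a demo token for a client (HMAC-SHA256 over its id)."""
    return hmac.new(SECRET, client_id.encode(), hashlib.sha256).hexdigest()


@dataclass
class Gateway:
    scopes: dict  # client id -> set of tool names that client may call
    audit_log: list = field(default_factory=list)

    def call(self, client_id: str, token: str, tool: str) -> str:
        # 1. Authentication: reject callers that cannot prove who they are.
        if not hmac.compare_digest(token.encode(), sign(client_id).encode()):
            outcome = "denied:auth"
        # 2. Scoped permissions: only tools granted to this client are allowed.
        elif tool not in self.scopes.get(client_id, set()):
            outcome = "denied:scope"
        else:
            outcome = "allowed"
        # 3. Audit log: record every attempt, including the denied ones.
        self.audit_log.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "who": client_id,
            "tool": tool,
            "outcome": outcome,
        })
        return outcome


gateway = Gateway(scopes={"assistant": {"crm.read"}})
token = sign("assistant")
print(gateway.call("assistant", token, "crm.read"))     # allowed
print(gateway.call("assistant", token, "hr.read"))      # denied:scope
print(gateway.call("assistant", "forged", "crm.read"))  # denied:auth
```

Note that denied attempts are logged too. Those entries are often the most useful ones when you are looking for unusual activity.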

Think of it like this: Just because USB made it easy to plug in devices didn’t mean you wanted a random flash drive infecting your laptop. The same goes for MCP; convenience plus security is the winning combo.

Real-world Use Cases

This isn’t just theory. MCP applications are already showing up in the wild, and AI development companies around the world are already using the protocol.

OpenAI

OpenAI’s early plugin experiments were more than just add-ons; they pointed in the same direction as MCP. Rather than building one-off, fragile integrations, OpenAI has been moving toward standardized, secure ways for models to connect with tools. Supporting MCP is a natural next step in that direction.

DeepMind

Known for pushing boundaries in AI architecture, DeepMind is exploring how MCP can allow models to draw from multiple tools and contexts at once. By letting models pull knowledge and actions from several tools together, MCP supports richer, more context-aware AI systems, something DeepMind is deeply invested in for advanced applications.

Enterprise AI platforms

In industries like finance, healthcare, and enterprise IT, MCP is becoming a game-changer. Instead of juggling dozens of disconnected integrations, businesses can let AI access databases, CRMs, and communication platforms through one unified protocol. This not only streamlines operations but also improves security and consistency across tools.

How to Implement MCP: Integration Strategies with SDKs

Implementing MCP doesn’t mean reinventing the wheel. There are already SDKs (software development kits) and libraries designed to help you connect your applications using the Model Context Protocol.

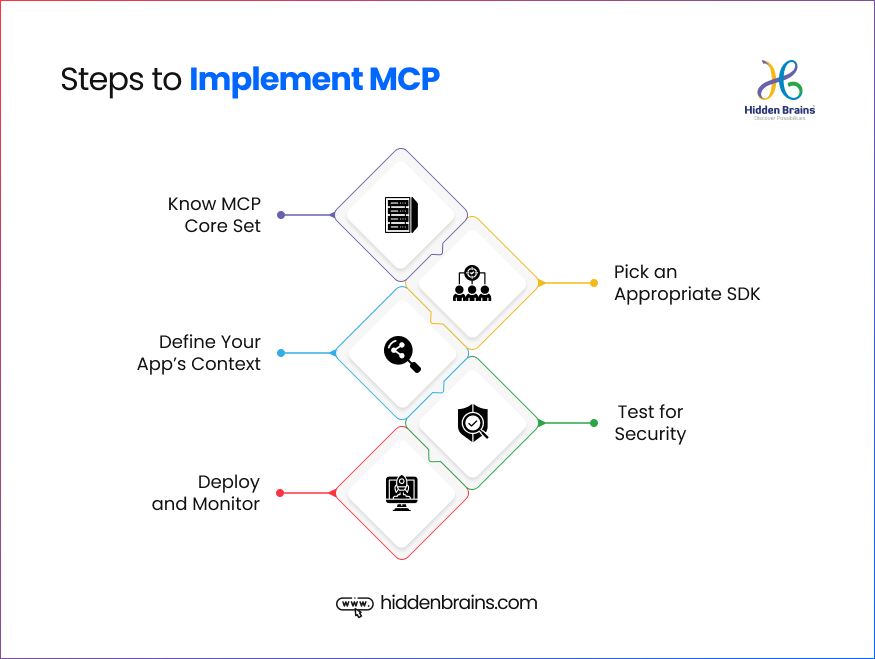

Steps to Get Started

Choose the Right SDK

Choose the right SDK that fits your tech stack. Whether you’re working in Python, Node.js, or another language, SDKs make implementation easier by handling much of the heavy lifting. This step ensures you can focus on functionality instead of reinventing the technical plumbing.

Define Your App’s Context

Your application needs to decide what it will “offer” to the AI: data, tools, or actions. For example, a CRM might expose customer details while a healthcare app might share patient data (securely). This context is what allows the AI to interact meaningfully with your app.
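As a rough sketch of what “offering” something looks like: in the published protocol, a tool is described by a `name`, a `description`, and an `inputSchema` written as JSON Schema. The CRM tool below, including its name and fields, is a hypothetical example, and `missing_arguments` is a small helper of our own rather than an SDK function.

```python
import json

# A hypothetical CRM lookup, described the way an MCP server would advertise it.
customer_lookup_tool = {
    "name": "crm_get_customer",
    "description": "Look up a customer record by email address.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "email": {"type": "string", "description": "Customer email address"},
        },
        "required": ["email"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """Return the required argument names the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(json.dumps(customer_lookup_tool, indent=2))
print(missing_arguments(customer_lookup_tool, {}))
print(missing_arguments(customer_lookup_tool, {"email": "a@example.com"}))
```

The description matters more than it looks. It is what the model reads when deciding whether this tool fits the task, so write it for that audience.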

Test for Security

Before rolling anything out, make sure your MCP implementation has security baked in. Validate permissions, control what the AI can access, and prevent unnecessary data sharing. Just like you wouldn’t give every app on your phone all permissions, keep MCP access tightly scoped and monitored.
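One way to make “prevent unnecessary data sharing” testable is to put an allowlist between your data and the model, then write a test that fails if a sensitive field slips through. This is a minimal sketch; the field names and the `scope_record` helper are assumptions invented for the example.

```python
ALLOWED_FIELDS = {"name", "email", "plan"}  # hypothetical allowlist for one tool


def scope_record(record: dict, allowed: set = ALLOWED_FIELDS) -> dict:
    """Keep only the fields this integration is permitted to share."""
    return {key: value for key, value in record.items() if key in allowed}


def test_sensitive_fields_are_dropped() -> None:
    record = {
        "name": "Ada",
        "email": "ada@example.com",
        "plan": "pro",
        "ssn": "000-00-0000",        # must never reach the model
        "salary": 123456,            # must never reach the model
    }
    shared = scope_record(record)
    assert shared == {"name": "Ada", "email": "ada@example.com", "plan": "pro"}
    assert "ssn" not in shared and "salary" not in shared


test_sensitive_fields_are_dropped()
print("security check passed")
```

An allowlist is the safer default here. A blocklist silently leaks any new sensitive column that someone adds later.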

Deploy and Monitor

Once live, it’s time to watch how your integration behaves in the real world. Monitor logs, track performance, and refine context definitions if needed. Staying proactive ensures your AI tools stay efficient, secure, and scalable as usage grows.
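For the monitoring side, even a small wrapper around each tool handler tells you a lot. The sketch below counts calls, errors, and time spent per tool. The `monitored` decorator, the in-memory `metrics` store, and the `get_customer` handler are all illustrative assumptions; in production you would ship these numbers to whatever metrics system you already use.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory counters per tool; a stand-in for a real metrics backend.
metrics = defaultdict(lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0})


def monitored(tool_name: str):
    """Wrap a tool handler so every call is counted and timed."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                metrics[tool_name]["errors"] += 1
                raise  # monitoring must not swallow the failure
            finally:
                entry = metrics[tool_name]
                entry["calls"] += 1
                entry["total_seconds"] += time.perf_counter() - start
        return wrapper
    return decorator


@monitored("crm_get_customer")
def get_customer(email: str) -> dict:
    if "@" not in email:
        raise ValueError("not an email address")
    return {"email": email}


get_customer("a@example.com")
try:
    get_customer("not-an-email")
except ValueError:
    pass  # the failed call is still counted

print(dict(metrics["crm_get_customer"]))
```

A rising error count on one tool is often the first sign that its context definition needs refining.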

Frequently Asked Questions

What is the Model Context Protocol (MCP) in AI?

The Model Context Protocol (MCP) is an open standard that defines how AI models, tools, and applications share context with each other. In simple terms, it acts like a universal translator that allows different systems to talk in the same language. Instead of building one-off integrations for every app, MCP provides a common framework, making it easier, faster, and safer to connect AI with the tools we use every day.

How does MCP improve AI applications?

AI applications become far more powerful when they’re not limited to siloed environments. MCP improves them by:

– Giving AI models a consistent way to access and exchange information.

– Reducing the friction in connecting with external apps, data sources, and APIs.

– Ensuring those connections remain secure and auditable.

Think of it like moving from old-school USB drives to cloud storage; it just makes everything more seamless, scalable, and reliable.

What are the key MCP benefits?

The MCP benefits go beyond convenience. Some of the biggest advantages include:

Simplified integrations – No more custom coding for every connection.

Stronger security – When authentication, scoped permissions, and logging are part of the implementation.

Faster scalability – Easily extend AI to new apps as business needs grow.

Future-proofing – Standardization means your AI won’t get locked into outdated architectures.

In short, MCP lets AI evolve with your business instead of holding it back.

What is the MCP architecture?

The Model Context Protocol architecture is built around the MCP core set, a foundational set of rules that define how context (like data, requests, and actions) flows between models and applications. It’s structured in a way that supports:

– Context-sharing between multiple AI agents.

– Secure, permission-based command execution.

– Extensibility for future applications and frameworks.

So, the architecture isn’t just technical plumbing; it’s the backbone that makes a connected AI ecosystem possible.
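To make that flow a bit more concrete, here is a rough sketch of the opening handshake. In the published specification, a session starts with an `initialize` request in which the client and server agree on a protocol version and exchange capabilities. The version list, server name, and capability set below are illustrative assumptions, and `handle_initialize` is a toy handler, not a full implementation.

```python
# Versions this toy server understands, oldest to newest (illustrative list).
SUPPORTED_VERSIONS = ["2024-11-05", "2025-03-26"]


def handle_initialize(request: dict) -> dict:
    """Answer an `initialize` request with the negotiated protocol version."""
    requested = request["params"]["protocolVersion"]
    # Echo the client's version if we support it, otherwise offer our newest.
    version = requested if requested in SUPPORTED_VERSIONS else SUPPORTED_VERSIONS[-1]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": version,
            "capabilities": {"tools": {}},  # this toy server only offers tools
            "serverInfo": {"name": "toy-server", "version": "0.1.0"},
        },
    }


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "toy-client", "version": "0.1.0"},
    },
}
response = handle_initialize(request)
print(response["result"]["protocolVersion"])  # 2024-11-05
```

The capability exchange is where extensibility comes from. A client that does not understand a newer feature simply never sees it advertised as something it can use.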

What are the MCP security risks?

Like any powerful system, MCP comes with potential security risks, such as:

– Unauthorized access if authentication isn’t handled properly.

– Data leakage if sensitive context isn’t scoped or encrypted.

– Protocol misuse if malicious actors exploit poorly configured integrations.

Mitigation strategies include robust authentication, role-based permissions, encryption, and audit logs. The MCP specification includes an optional authorization framework for HTTP-based connections, but most of these protections still depend on how each client and server is implemented.

How do I implement MCP in my application?

Implementing MCP doesn’t need to be overwhelming. The steps usually include:

– Start with the official SDKs and libraries that make setup easier.

– Define your application’s “context” (the data and operations your AI needs).

– Integrate gradually, beginning with your most critical tools.

– Add strong security checks like authentication and logging.

Done right, you can roll out MCP in phases and avoid the “big bang” migration that often derails AI projects.

MCP vs Function Calling: What’s the difference?

This is one of the most common questions. Here’s the simple breakdown:

– Function Calling: Lets AI call specific functions you define. Useful, but limited to what’s hard-coded.

– MCP: Provides a full protocol where AI can securely interact with many apps, tools, and data sources in a standardized way.

So, if function calling is like asking your phone’s assistant to “set a timer,” MCP is like giving that assistant access to your calendar, Slack, CRM, and analytics, all with proper rules and security.

What companies are using MCP?

Big names are already testing and adopting MCP. OpenAI has rolled it out for expanding ChatGPT’s tool ecosystem, DeepMind is exploring it for agent coordination, and enterprises in finance, healthcare, and productivity platforms are experimenting with it for real-world use cases. The adoption curve looks a lot like how APIs spread a decade ago, starting small, then becoming a standard.

Is MCP the future of AI integrations?

Many practitioners think so. Just as APIs standardized the web, MCP is shaping up to standardize how AI systems integrate with the digital world. It reduces fragmentation, encourages consistent security practices, and gives developers a “common language” to build on. As AI becomes more embedded in business operations, protocols like MCP may very well define the next era of connected intelligence.

Conclusion

So, what’s the big picture?

The Model Context Protocol isn’t just another tech acronym. It’s a shift in how AI applications are built and scaled. By standardizing communication, MCP makes AI smarter, safer, and more connected.

Looking ahead:

1. Expect MCP to become as common as APIs are today.

2. Enterprises will adopt it to cut integration costs.

3. AI apps will feel less like isolated tools and more like parts of a unified ecosystem.

We’re not just building better models; we’re building better ways for those models to work with the world. And MCP might just be the quiet hero behind the next big wave of AI innovation.
