Summary

AI pilot programs often fail not because of technology, but due to weak strategy, unclear KPIs, and poor execution. This blog explains how enterprises can design AI pilot programs that deliver real business impact. It covers selecting high-value use cases, aligning pilots with business goals, defining success metrics, and scaling responsibly. With real-world examples and practical steps, it shows how disciplined AI pilot programs move from experiments to measurable ROI.

AI pilot programs are everywhere. Business impact is not. Across enterprises, leaders approve AI pilot programs with optimism. Teams build models. Dashboards look impressive. Demos go well. Then nothing scales.

The hard truth is simple. Most AI pilot programs never move the P&L. A 2025 MIT study found that 95% of GenAI pilot programs deliver no measurable impact on the P&L, while only 5% drive meaningful revenue or cost acceleration. The issue is not technology. It is execution, alignment, and discipline.

Successful companies treat AI pilot programs very differently. They do not experiment for learning alone. They pilot to decide.

This blog explains how.

What is an AI Pilot Program?

An AI pilot program is not just an AI proof of concept. A proof of concept answers one question: can this work technically?

An AI pilot program answers a more important question: Should we invest further?

That distinction matters. An AI pilot program is a controlled business experiment. It is designed to reduce uncertainty. It validates impact, risk, and scalability before enterprise rollout.

Strong pilots test three things simultaneously:

- Business value

- Operational fit

- Organizational readiness

If any one fails, scaling fails. That is why pilots should never be owned only by data teams. They must sit at the intersection of business, technology, and operations.

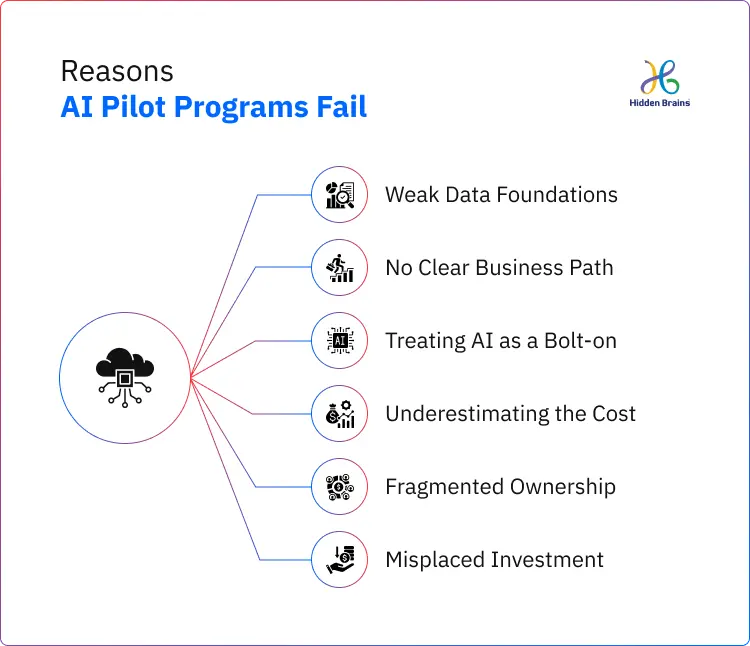

Why Most AI Pilot Programs Fail to Deliver ROI

Most AI pilot programs do not fail loudly. They fade quietly. They launch with excitement. They show early promise. Then they stall in review meetings, budget cycles, and scaling debates.

The uncomfortable reality is this. Failure is rarely about model accuracy. That said, enterprises do run into trouble when a pre-built model does not meet their needs. With custom AI development services from Hidden Brains, enterprises can get custom models, rather than generic ones, that solve their operational challenges and deliver quick impact.

Yet 95% of AI pilot programs never create P&L impact. The gap is not technical. It is structural, operational, and strategic.

Here are the real reasons AI pilot programs fail to deliver ROI.

1. Weak Data Foundations Undermine Trust Early

AI is only as strong as the data beneath it. Most pilots start with optimism and end with skepticism because the data is:

- Incomplete

- Inconsistent

- Poorly labeled

- Siloed across systems

Models may technically work. Outputs may look impressive. But business users do not trust the results. When training data is messy or biased, AI outputs feel unreliable. Decisions revert to manual judgment. Adoption stalls. ROI disappears.

Strong pilots begin with data readiness assessments. Weak pilots discover data issues after deployment, when it is already too late.
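A data readiness assessment does not have to be elaborate. The sketch below is one minimal way to score a sample of records for completeness and label coverage before a pilot starts. The function name `assess_readiness`, the field names, and the thresholds are all illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class ReadinessReport:
    completeness: float    # share of required fields that are filled in
    label_coverage: float  # share of rows that carry a label
    ready: bool


def assess_readiness(rows, required_fields, label_field,
                     min_completeness=0.95, min_label_coverage=0.90):
    """Score a sample of records before the pilot starts.

    Thresholds are illustrative assumptions, not industry standards.
    """
    if not rows or not required_fields:
        return ReadinessReport(0.0, 0.0, False)

    def present(value):
        # Treat None and empty strings as missing; 0 counts as a real value.
        return value is not None and value != ""

    filled = sum(present(row.get(f)) for row in rows for f in required_fields)
    labeled = sum(present(row.get(label_field)) for row in rows)

    completeness = filled / (len(rows) * len(required_fields))
    label_coverage = labeled / len(rows)
    ready = (completeness >= min_completeness
             and label_coverage >= min_label_coverage)
    return ReadinessReport(completeness, label_coverage, ready)


# Hypothetical sample of transaction records.
sample = [
    {"amount": 120.0, "merchant": "A1", "is_fraud": 0},
    {"amount": None, "merchant": "B2", "is_fraud": 1},
    {"amount": 75.5, "merchant": "", "is_fraud": None},
    {"amount": 40.0, "merchant": "C3", "is_fraud": 0},
]
report = assess_readiness(sample, ["amount", "merchant"], "is_fraud")
print(report)
```

A report that comes back with `ready=False` is a signal to fix the data first, not to tune the model harder.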

2. No Clear Business Problem or KPI

Many pilot programs are launched for the wrong reason. They exist because AI is available. Not because a business problem demands it.

These efforts often show up as:

- Generic chatbots

- Auto-summarization tools

- “Smart” dashboards with no decision owner

They sound innovative. But they rarely move the needle. Without a clear link to revenue, cost, risk, or customer experience, executives struggle to justify continued investment. Pilots survive on enthusiasm, not evidence.

Successful AI pilot programs are anchored to one hard question: What measurable business outcome will this improve? If that answer is unclear, ROI will be too.

3. Treating AI as a Bolt-on, Not a Workflow Change

This is one of the most common and costly mistakes. AI pilot programs often run in clean, controlled environments. They are isolated from real workflows. They bypass legacy systems, approvals, and constraints.

MIT’s research shows the core issue is not model quality but flawed enterprise integration. AI creates value only when it operates inside living systems, with approvals, handoffs, and real decision flows where people act and decide. When pilots run outside these systems, they remain theoretical. Models may perform well, but outcomes do not. Real impact comes from embedding AI directly into human decision loops as part of everyday work, not as a bolt-on tool.

When AI is bolted on instead of embedded, users ignore it. The pilot technically succeeds. The business outcome does not. Real ROI comes from redesigning workflows, not layering tools on top.

4. Underestimating Production, Infrastructure, and Governance Costs

Pilots hide the true cost of AI. In pilot mode, systems are light. Security is relaxed. Monitoring is minimal. Compliance is often deferred.

Moving an AI pilot program to production changes everything. Suddenly, organizations must account for:

- Model monitoring and drift detection

- Data security and access controls

- Regulatory compliance

- Retraining pipelines

- AI integration with core systems

This is where many AI pilot programs flip from positive to negative ROI. Enterprise analysis shows that while over 80% of pilots appear successful, fewer than 50% retain ROI in production due to these hidden costs.

A strong AI adoption strategy accounts for this upfront. Weak ones discover it during scaling and stall.
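The arithmetic behind that flip is simple. The sketch below computes ROI as (benefit − cost) / cost, first with pilot costs only and then with production costs added. Every figure is hypothetical and chosen only to illustrate the calculation; the cost categories mirror the list above.

```python
def roi(annual_benefit, annual_cost):
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (annual_benefit - annual_cost) / annual_cost


# Hypothetical figures, for illustration only.
benefit = 500_000
pilot_cost = 200_000
production_extras = {
    "monitoring_and_drift_detection": 90_000,
    "security_and_access_controls": 70_000,
    "regulatory_compliance": 60_000,
    "retraining_pipelines": 80_000,
    "core_system_integration": 100_000,
}

pilot_roi = roi(benefit, pilot_cost)
production_roi = roi(benefit, pilot_cost + sum(production_extras.values()))

print(f"Pilot ROI:      {pilot_roi:.0%}")
print(f"Production ROI: {production_roi:.0%}")
```

With these assumed numbers the same benefit yields a strongly positive ROI in pilot mode and a negative one in production, which is why the production cost lines belong in the business case from the start.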

5. Fragmented Ownership and the Organizational “Learning Gap”

AI pilot programs often suffer from shared responsibility.

No single owner. No clear accountability. No sustained capability building. MIT describes this as a “learning gap.”

Organizations adopt GenAI tools. But they do not invest in:

- Skills

- Change management

- Operating models

- Governance

The result is a growing divide.

About 5% of companies turn pilots into transformation. The rest remain stuck in perpetual experimentation.

AI pilot programs succeed when ownership is clear and learning compounds. They fail when responsibility is fragmented, and knowledge resets every cycle.

6. Misplaced Investment and Poor Use-case Selection

Enterprise AI budgets are often skewed. Sales and marketing pilots get funded first. Operations and finance are treated as secondary.

This is backward. Operational and financial use cases like fraud detection, forecasting, pricing, and compliance often offer:

- Cleaner data

- Repeatable processes

- Clear KPIs

- Faster ROI

When organizations chase visibility over value, pilots struggle to justify scale. High-impact AI pilot programs prioritize economic clarity over excitement.

Choosing High-impact, Realistic Use Cases

Every successful AI pilot program starts with a real business problem. Not a curiosity. Not a future idea. A pain that already costs money.

High-impact use cases share four traits:

- Clear economic value

- Existing, reliable data

- Repeatable processes

- A business owner who feels the pain

Let’s look at what this means in practice.

Use Case 1: Fraud Detection (Risk Reduction)

Fraud detection is a classic high-impact AI pilot program.

Why?

Because the value is immediate. And the metrics are hard to argue with.

Financial institutions process millions of transactions every day. Every delay increases exposure. Every false positive frustrates customers. AI pilot programs in fraud focus on one goal. Detect risk earlier. Act faster. Cyber fraud is one of the most prominent threats enterprises face today. You can also explore the free cybersecurity checklist to protect your business in this digital era.

Real pilot example

Mastercard’s Decision Intelligence platform ran an AI pilot program to improve real-time fraud detection across its global payment network.

The pilot analyzed up to 159 billion transactions annually, scoring each transaction in under 50 milliseconds.

Results from the pilot phase:

- 300% increase in fraud detection rates.

- 85% reduction in false positives.

- Significant reduction in fraud losses and chargeback costs.

The outcome was clear. Higher protection. Lower friction. Better trust.

Why this pilot worked

Several fundamentals were in place from day one:

- Rich, real-time transaction history data at massive scale.

- Mature and standardized payment processing workflows.

- Strong C-suite sponsorship from risk, compliance, and finance leadership.

- Clear KPIs tied to detection uplift, false positive reduction, and ROI.

This is what a strong AI pilot-to-production pathway looks like. The business case was proven early. Risk was quantified. Scaling was justified. Value came first. Technology followed.

Use Case 2: Legal Research Automation (Efficiency & Accuracy)

Legal research and document analysis can consume enormous time. Lawyers and paralegals spend hours manually reviewing case files. It slows case preparation, raises costs, and drains capacity.

AI proofs of concept that automate legal research target high-impact outcomes. They improve speed, consistency, and insight quality. And they deliver measurable business value.

Real pilot example

InstaLegalAI, an AI-powered legal research and analysis platform, was piloted with a major U.S.-based law firm to transform how legal professionals work. The platform leverages advanced NLP and machine learning to automate document review, extract key facts, and surface relevant case law and arguments.

Impact of this pilot:

- 60% faster legal document review than manual processes

- 50% reduction in overall case preparation time.

- 40% increase in legal accuracy and insight relevance.

- Lawyers could focus more on strategy, not sifting papers.

The impact was both operational and strategic. Time-intensive tasks shrank dramatically. Lawyers gained confidence in outputs. Client delivery accelerated.

Use Case 3: Customer Support Automation (Cost + Experience)

Customer support is another pilot-friendly area for AI adoption. High volume. Repeatable queries. Clear metrics.

AI chatbots and virtual agents now handle routine questions, allowing human agents to focus on complex issues.

Enterprise examples

- Teladoc Health piloted Microsoft 365 Copilot to automate administrative tasks in telehealth workflows. Agent productivity increased without compromising care quality.

- Virtual Dental Care used Azure-based AI to reduce mobile clinic paperwork by 75%, freeing clinicians to spend more time with patients.

Industry-wide results from 2025 AI service deployments:

- 87% faster resolution times

- 12% increase in CSAT

- 80% of routine queries are handled by AI

Why these pilots succeeded:

- CRM and ticketing data were ready.

- Support workflows were mature.

- Product and customer success leaders owned outcomes.

- KPIs were tied to resolution time, cost per ticket, and CSAT.

The AI customer service market is projected to reach $47.8B by 2030, growing at 25.8% CAGR. But only disciplined AI pilot programs capture this upside.

Aligning AI Pilot Programs With Business Goals and KPIs

This is where most pilots fail. Teams define success vaguely. Executives expect clarity.

Every AI pilot program should map to one primary business goal:

- Reduce cost

- Increase revenue

- Lower risk

- Improve speed

Then define:

- Baseline metrics

- Target improvement

- Decision thresholds

For example:

- Reduce fraud losses by 20% within 60 days.

- Cut average ticket resolution time by 50%.

- Improve forecast accuracy by 25%.

Set go/no-go criteria upfront. If targets are not met, stop. If they are met, scale with confidence. This discipline separates pilots from experiments. The following steps counter the 95% failure rate of pilots that lack a clear ROI vision.
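As a rough illustration, baseline, target, and threshold can be written down as data and the go/no-go decision reduced to a check. The class `KpiTarget`, the function `go_no_go`, and all figures below are hypothetical, and the rule "every KPI must clear its target" is one possible policy, not the only one.

```python
from dataclasses import dataclass


@dataclass
class KpiTarget:
    name: str
    baseline: float            # value before the pilot (must be non-zero)
    observed: float            # value measured during the pilot
    target_improvement: float  # 0.20 means a 20% improvement over baseline
    lower_is_better: bool = True

    def improvement(self) -> float:
        """Relative improvement over baseline, positive when things got better."""
        change = (self.baseline - self.observed) / self.baseline
        return change if self.lower_is_better else -change

    def met(self) -> bool:
        return self.improvement() >= self.target_improvement


def go_no_go(kpis):
    """Return "go" only if every KPI clears its threshold, else "no-go"."""
    return "go" if all(k.met() for k in kpis) else "no-go"


# Hypothetical pilot results.
fraud = KpiTarget("fraud losses", baseline=1_000_000, observed=780_000,
                  target_improvement=0.20)
tickets = KpiTarget("ticket resolution hours", baseline=10.0, observed=6.0,
                    target_improvement=0.50)
forecast = KpiTarget("forecast accuracy", baseline=0.60, observed=0.78,
                     target_improvement=0.25, lower_is_better=False)

for kpi in (fraud, tickets, forecast):
    print(f"{kpi.name}: {kpi.improvement():.0%} improvement, met={kpi.met()}")
print("Decision:", go_no_go([fraud, tickets, forecast]))
```

In this made-up run, resolution time improves but misses its 50% target, so the decision is "no-go" even though the other two KPIs pass. Agreeing on that rule before the pilot starts is the point.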

Step 1: Define Business Objectives before AI Enters the Room

Start with strategy. Not technology.

Every AI pilot program should map to one of the company’s top priorities:

- Revenue growth

- Cost reduction

- Risk mitigation

- Customer retention

If a pilot program cannot be clearly tied to one of these, pause it.

This step forces discipline. It filters out curiosity-driven ideas. And it ensures leadership attention from day one.

Step 2: Select and Prioritize High-impact Use Cases

Not all use cases deserve a pilot. Strong candidates share three traits:

- Data is available and reliable.

- The process is mature and repeatable.

- A business owner is accountable for outcomes.

Then apply one more filter. Impact versus complexity. Prioritize use cases where KPI movement is meaningful and implementation friction is manageable. This is how pilots earn momentum instead of resistance.
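One simple way to apply both filters is to drop candidates that miss any of the three traits, then rank the rest by an impact-to-complexity ratio. The sketch below assumes hypothetical 1 to 5 scores agreed by the business and delivery teams; the candidates, scores, and owners are invented for illustration.

```python
def prioritize(use_cases):
    """Keep use cases that have all three traits, ranked by impact / complexity."""
    eligible = [
        u for u in use_cases
        if u["data_ready"] and u["process_mature"] and u["owner"]
    ]
    return sorted(eligible,
                  key=lambda u: u["impact"] / u["complexity"],
                  reverse=True)


# Hypothetical candidates; impact and complexity are scored 1 (low) to 5 (high).
candidates = [
    {"name": "fraud detection", "impact": 5, "complexity": 3,
     "data_ready": True, "process_mature": True, "owner": "Head of Risk"},
    {"name": "demand forecasting", "impact": 4, "complexity": 2,
     "data_ready": True, "process_mature": True, "owner": "VP Supply Chain"},
    {"name": "smart dashboard", "impact": 3, "complexity": 1,
     "data_ready": True, "process_mature": True, "owner": None},
]

ranked = prioritize(candidates)
for u in ranked:
    print(u["name"], round(u["impact"] / u["complexity"], 2))
```

The dashboard has the best raw ratio here but no accountable owner, so it never reaches the ranking.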

Step 3: Define Outcome-focused KPIs That Matter

Limit KPIs. But make them sharp. Each AI pilot program should track three to five metrics. No more.

Good KPIs are:

- Specific

- Measurable

- Time-bound

- Directly tied to business value

Examples include:

- Cost per transaction

- Resolution time

- Error or fraud rate

- Revenue leakage

Avoid vanity metrics. Accuracy alone does not justify budgets. Business outcomes do.
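These rules are easy to turn into a quick review of a proposed KPI list. The sketch below flags sets outside the three-to-five range, KPIs with no unit, target, or deadline, and a few example vanity metrics. The `Kpi` class, the `VANITY_METRICS` set, and the sample values are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Illustrative examples only; each team would define its own list.
VANITY_METRICS = {"model accuracy", "number of demos", "dashboard views"}


@dataclass
class Kpi:
    name: str
    unit: Optional[str]
    target: Optional[float]
    deadline: Optional[date]


def review_kpis(kpis):
    """Return a list of problems found in a proposed KPI set (empty if none)."""
    problems = []
    if not 3 <= len(kpis) <= 5:
        problems.append(f"expected 3 to 5 KPIs, got {len(kpis)}")
    for k in kpis:
        if k.unit is None or k.target is None:
            problems.append(f"{k.name}: not measurable (missing unit or target)")
        if k.deadline is None:
            problems.append(f"{k.name}: not time-bound (missing deadline)")
        if k.name.lower() in VANITY_METRICS:
            problems.append(f"{k.name}: vanity metric, tie it to a business outcome")
    return problems


# Hypothetical proposal with too few KPIs and one vanity metric.
proposal = [
    Kpi("Resolution time", "hours", 4.0, date(2026, 3, 31)),
    Kpi("Model accuracy", "%", 95.0, None),
]
for problem in review_kpis(proposal):
    print("-", problem)
```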

Step 4: Assign Clear Ownership and Secure Buy-in Early

Every pilot needs a single owner. Not IT. Not data science. A business leader who feels the pain.

This owner:

- Defines success

- Removes blockers

- Owns scale decisions

Cross-functional buy-in follows naturally when ownership is clear.

Without it, pilots drift. And ROI disappears.

Step 5: Design Pilot Scope Around KPIs, Not Features

Scope should follow metrics. Design the pilot to test exactly what the KPIs measure. Nothing more. Nothing less.

This keeps pilots lean. Timelines are short. And outcomes are unambiguous.

If KPIs move, scale. If they do not, stop. That clarity is what separates pilots from experiments and experiments from impact.

How Can Hidden Brains Help?

With 22+ years of experience, Hidden Brains helps enterprises move from AI proof of concept to production-ready solutions. We design custom AI initiatives aligned to business goals, covering ideation, data readiness, pilots, integration, and governance so AI investments deliver measurable ROI, not experiments.

Frequently Asked Questions

AI pilots raise the right questions. Clear answers turn experimentation into execution. Here are the most common ones leaders ask when scaling AI responsibly.

1. How to scale AI pilot programs successfully?

Scale only after proving business impact. Validate KPIs, data readiness, and workflow integration during the pilot. Plan for production costs, governance, and change management early to protect ROI during expansion.

2. Why do AI pilot programs fail?

Most fail due to weak data, unclear business goals, poor ownership, and hidden production costs. Technical success alone does not guarantee business value or executive confidence.

3. How do companies turn AI experiments into business value?

By tying pilots to real business problems, defining success upfront, assigning ownership, and making clear scale-or-stop decisions based on measurable outcomes.

4. What are the right AI pilot success metrics?

Use outcome-driven metrics: cost reduction, revenue uplift, risk reduction, time savings, and accuracy improvements tied directly to business KPIs, not model performance alone.

5. What are AI implementation best practices for enterprises?

Start with strategy, validate data early, design pilots around workflows, involve governance teams upfront, and treat AI as an operating model change, not a standalone tool.

6. What is the ideal timeline for an AI pilot program?

Most AI pilot programs should run for 6 to 12 weeks. This is enough time to validate data readiness, measure KPI impact, and test workflow integration. Longer pilots often lose momentum and clarity, making ROI harder to prove.

Conclusion

AI success is not about running more experiments. It is about running the right ones. When AI pilot programs align with business goals, clear KPIs, and disciplined execution, they move beyond hype. They drive decisions. They deliver ROI.
